Containers And Container Images

Container images encapsulate a service executable along with the libraries and files it needs, and containers provide a way to run many services isolated from one another. That’s why container images and containers are perfectly suited to serve as the basic building blocks of a microservices-based application.

Microservices are everywhere, and they are here to stay. In this blog post, we’ll examine their fundamental building blocks: container images and containers.

- Why Container Images?

- The Docker Image Format

- The Pitfalls Of Filesystem Layering

- Multi-Stage Image Builds

- Running A Container

- Wrap-Up: Containers Are Awesome!

Why Container Images?

The definition of the term microservice architecture we introduced in the previous blog post says nothing about technology, and indeed, you could probably build microservices with any technology you feel comfortable with. Container images, however, are very well suited for the purpose because they provide the required level of encapsulation.

When building microservices, an organization will typically structure its development teams so that each team can work on one service (or one set of related services). Because each team can then operate largely autonomously, communication and organizational overhead between teams is reduced. But this organizational benefit turns into a nightmare if the services the teams produce have dependencies that they don’t encapsulate well: whenever their services are supposed to run in the same environment, the teams have to coordinate on the versions of programming languages and operating system libraries they use. Without proper dependency encapsulation, the teams are coupled to one another through their technology decisions, which eliminates one important benefit of the microservice architecture.

Container images solve this problem by encapsulating everything an application needs in order to run. (External dependencies such as a data store cannot simply be baked into the image itself, of course. We’ll see in upcoming blog posts that each microservice built on top of a container image gets its own data store, but that’s beyond the scope of this post.) With container images, the services produced by each team are completely decoupled, apart from the APIs they use to communicate, so teams can operate truly independently. Additionally, container images are immutable: once built, they are incredibly easy to deploy and distribute.

The Docker Image Format

Container images are binary packages that encapsulate not only the application executable but also all the files, libraries, and other dependencies the application needs to run inside an operating system container. An image is therefore a kind of blueprint from which instances of the encapsulated application can be created in the form of containers.

The most popular format for such images is the Docker format. Docker images are built around the idea of an overlay filesystem: each image consists of multiple filesystem layers, and each layer adds, modifies, or removes files relative to the layers below it. A Dockerfile controls which filesystem layers the image will consist of and which actions are run to create them.
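To make the layering idea more concrete, here is a minimal Python sketch that models each layer as a dictionary mapping file paths to contents and computes the merged filesystem a container would see. This is a simplified model assumed for illustration only: real layers are tar archives, and a deletion is recorded as a special whiteout file (named .wh.<filename>) rather than the WHITEOUT marker used here.

```python
from typing import Dict, List, Optional

# Hypothetical marker meaning "this layer deletes the path".
# Real layers record deletions as ".wh.<filename>" whiteout files instead.
WHITEOUT = None

Layer = Dict[str, Optional[bytes]]


def merged_view(layers: List[Layer]) -> Dict[str, bytes]:
    """Apply layers bottom to top and return the files a container would see."""
    view: Dict[str, bytes] = {}
    for layer in layers:
        for path, content in layer.items():
            if content is WHITEOUT:
                # A deletion only hides the file in the merged view
                view.pop(path, None)
            else:
                # Adding or modifying a file: the upper layer wins
                view[path] = content
    return view


if __name__ == "__main__":
    base = {"/etc/os-release": b"alpine", "/app/config.ini": b"debug=true"}
    app = {"/app/config.ini": b"debug=false", "/app/service.py": b"print('hi')"}
    cleanup = {"/etc/os-release": WHITEOUT}
    print(sorted(merged_view([base, app, cleanup])))
```

Running it prints ['/app/config.ini', '/app/service.py']: the modified config from the upper layer wins, and the deleted file no longer shows up in the merged view, even though the lower layer itself is left untouched.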

The following is a (very simple) example of a Dockerfile:

```dockerfile
# Define base image
FROM python:alpine3.12

# Add Python script to root directory within image
ADD hello_container_service.py /

# Run Python script
CMD ["python", "./hello_container_service.py"]
```

A full list of Dockerfile commands is available from the official Dockerfile reference.

The Pitfalls Of Filesystem Layering

Each layer in a Docker image is really just a description of the delta to the previous layer. This is very efficient in terms of storage and transmission, but there’s no free lunch: the approach entails two counterintuitive drawbacks.

What’s Gone Is Not Really Gone

Let’s consider the following filesystem layers:

```text
.
|_ layer A: contains some very large file
|_ layer B: removes that large file
|_ layer C: <does more things>
```

Because layer B is only a description of the delta to layer A, it doesn’t actually remove anything from the latter. Any image built on top of layer A, directly or indirectly, will always contain the large file, even though the file is no longer visible inside a container.
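A minimal Python sketch of this effect, assuming a simplified model in which each layer is a dictionary mapping paths to file contents and None stands in for a deletion (real layers use whiteout files): the bytes a registry has to store and transfer are the sum over all layers, while the bytes a container can actually see come only from the merged view.

```python
from typing import Dict, List, Optional

Layer = Dict[str, Optional[bytes]]  # None marks a deleted path (a "whiteout")


def stored_bytes(layers: List[Layer]) -> int:
    """Bytes shipped with the image: every layer is kept in full."""
    return sum(len(c) for layer in layers for c in layer.values() if c is not None)


def visible_bytes(layers: List[Layer]) -> int:
    """Bytes visible inside a container after applying all layers in order."""
    view: Dict[str, bytes] = {}
    for layer in layers:
        for path, content in layer.items():
            if content is None:
                view.pop(path, None)
            else:
                view[path] = content
    return sum(len(c) for c in view.values())


if __name__ == "__main__":
    layer_a = {"/data/huge.bin": b"x" * 10_000_000}  # some very large file
    layer_b = {"/data/huge.bin": None}  # "removes" that large file
    layer_c = {"/app/run.sh": b"echo hello"}  # does more things
    layers = [layer_a, layer_b, layer_c]
    print(stored_bytes(layers), visible_bytes(layers))
```

For the three layers above, stored_bytes counts the 10 MB file plus the 10-byte script, while visible_bytes counts only the 10 bytes of the script.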

This also has security implications: if you put a secret into a layer, every image built on top of that layer will contain it, even if a later layer deletes it, and anyone with access to the image can extract it using the right tools. Therefore, it’s a very, very bad idea to put anything secret into a Docker image!
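To illustrate what “the right tools” can be, here is a Python sketch that walks an image archive written by docker save and lists every file stored in every layer, including files that a later layer deletes. It assumes the archive has a manifest.json at its root whose first entry lists the layer tarballs under "Layers", which is the layout docker save produces; the function name files_per_layer and the script name in the usage comment are hypothetical.

```python
import json
import tarfile
from typing import List, Tuple


def files_per_layer(image_archive: str) -> List[Tuple[int, str]]:
    """Return (layer index, path) for every regular file stored in any layer.

    Assumes the layout written by `docker save`: a manifest.json at the
    archive root listing the layer tarballs of each image under "Layers".
    """
    found: List[Tuple[int, str]] = []
    with tarfile.open(image_archive) as outer:
        manifest = json.load(outer.extractfile("manifest.json"))
        # Only the first image in the archive is inspected
        for index, layer_path in enumerate(manifest[0]["Layers"]):
            layer_file = outer.extractfile(layer_path)
            # Layer tarballs may or may not be compressed; "r:*" detects this
            with tarfile.open(fileobj=layer_file, mode="r:*") as layer:
                for member in layer.getmembers():
                    if member.isfile():
                        found.append((index, member.name))
    return found


if __name__ == "__main__":
    import sys

    # Hypothetical usage:
    #   docker save example:fat -o image.tar
    #   python find_files.py image.tar
    for index, path in files_per_layer(sys.argv[1]):
        print(f"layer {index}: {path}")
```

Whiteout entries (files named .wh.<filename>) show up in the listing as well, which makes it easy to spot files that were “deleted” in a later layer but are still sitting in an earlier one.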

Necessity To Rebuild After Change

Whenever something changes in a layer, all subsequent layers have to be rebuilt, repushed, and repulled, too. Take a look at the following example:

```text
.
|_ layer A: some base image for Java
|_ layer B: adds Java source files
|_ layer C: installs some packages
```

Whenever the source code changes, layer B has to be rebuilt, and because layer C sits on top of it, layer C has to be rebuilt as well. This is not optimal. Imagine a CI/CD server like Jenkins that automatically builds images and pushes them to a remote registry whenever something changes in a source code repository, and a container orchestration framework like Kubernetes that watches for new images to update running containers. The CI/CD server has to push, and the orchestration framework has to pull, two filesystem layers rather than just one, so the deployment process takes longer.

That’s why you’ll want to structure your image layers from least likely to change to most likely to change. An improved version of our example would therefore add the Java source files after the additional packages have been installed:

```text
.
|_ layer A: some base image for Java
|_ layer B: installs some packages
|_ layer C: adds Java source files
```
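Why the ordering matters can be sketched in a few lines of Python. The model below is a simplification assumed for illustration, roughly in the spirit of Docker’s build cache: each layer’s cache key is a hash of its parent’s key plus the layer’s own instruction and inputs, so changing one layer changes the key of every layer above it. The instruction strings are hypothetical placeholders for the layers in the two examples.

```python
import hashlib
from typing import List, Tuple

# (instruction, inputs) pairs; inputs stand in for the files a step reads
Step = Tuple[str, str]


def layer_keys(steps: List[Step]) -> List[str]:
    """Chain hashes so that each key depends on the key of the layer below it."""
    keys: List[str] = []
    parent = ""
    for instruction, inputs in steps:
        digest = hashlib.sha256(f"{parent}|{instruction}|{inputs}".encode()).hexdigest()
        keys.append(digest)
        parent = digest
    return keys


def layers_to_rebuild(old: List[Step], new: List[Step]) -> int:
    """Number of layers whose key changed between two builds."""
    return sum(a != b for a, b in zip(layer_keys(old), layer_keys(new)))


if __name__ == "__main__":
    base = ("FROM java-base-image", "")
    pkgs = ("RUN install-packages", "")
    old_src, new_src = ("COPY src/", "v1"), ("COPY src/", "v2")

    # Source files added before the packages: two layers change
    print(layers_to_rebuild([base, old_src, pkgs], [base, new_src, pkgs]))
    # Source files added last: only one layer changes
    print(layers_to_rebuild([base, pkgs, old_src], [base, pkgs, new_src]))
```

It prints 2 for the original ordering and 1 for the improved one, which is exactly the number of layers that would have to be rebuilt, pushed, and pulled after a source change.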

Multi-Stage Image Builds

To resolve the problem of unnecessarily large container images, Docker introduced the concept of multi-stage builds. They help us make sure the final image contains only what’s truly required to run the application executable and doesn’t needlessly carry around tools that may have been required previously to generate that executable.

Single-Stage Example

For example, consider the following Dockerfile (you can find this file along with a small sample application here):

```dockerfile
# Use base image containing both Maven and a JDK
FROM maven:3.6.3-openjdk-14-slim

# Create and switch to 'maven' directory
WORKDIR /maven

# Copy everything required for the build into the image
# (Unnecessary stuff ignored by means of the '.dockerignore' file)
COPY . .

# Build the artifact
RUN mvn clean package

# Switch to 'target' folder and run jar file
WORKDIR ./target
CMD ls | grep -e "jar$" | xargs java -jar
```

It copies the project files into the image and then runs mvn clean package to build the artifact. We can build the image using the following command:

```console
$ docker build -t example:fat -f DockerfileFat .
```

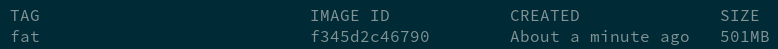

This works, but the Dockerfile is called DockerfileFat for a reason. Take a look at the image size:

The image weighs in at a whopping 500 MBs! Surely, employing a multi-stage build, we can do better than that.

Multi-Stage Example

The basic idea is to separate the task of building the artifact from the task of running it. So, the first stage will contain all tools necessary to do the build, and the second stage will encapsulate only what’s necessary to run the artifact plus the artifact itself.

Here’s how the Dockerfile looks if we split up our Java example into two stages:

```dockerfile
# Stage 1: Build -- same heavy-weight image as before
# This stage can later be referred to using the name 'build'
FROM maven:3.6.3-openjdk-14-slim AS build

# Same as before
WORKDIR /maven
COPY . .
RUN mvn clean package

# Stage 2: Run -- much more light-weight, only contains a JRE
# No name for this stage since we don't have to reference it later on
FROM adoptopenjdk:14.0.2_8-jre-hotspot-bionic
WORKDIR /app

# Copy the artifact from the build stage...
COPY --from=build /maven/target/*.jar .

# ... and run it
CMD ls | grep -e "jar$" | xargs java -jar
```

The image for the build stage contains all the required build tools: Maven and JDK 14. The resulting artifact is copied to the second stage, which is only responsible for running it. Therefore, the second stage can use a much more lightweight base image that contains only a JRE.

Using the following command, we can build the image:

```console
$ docker build -t example:slim -f DockerfileSlim .
```

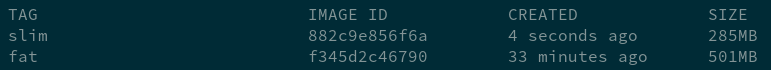

The effort was well worth it; there’s a noticeable size difference compared to the first image:

Running A Container

Finally, let’s put our newly acquired knowledge to good use and run a container. We’ll reuse an example from earlier to demonstrate this:

```dockerfile
# Define base image
FROM python:alpine3.12

# Add Python script to root directory within image
ADD hello_container_service.py /

# Run Python script
CMD ["python", "./hello_container_service.py"]
```

You can find both this Dockerfile and the source code for the sample script in this GitHub repository. The script starts a simple web server on port 8081 that responds to any incoming GET request with a short greeting.
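The actual script lives in the repository mentioned above. As a rough idea of what such a service might look like, here is a minimal sketch using only Python’s standard library http.server module; the handler class name and the exact response body are assumptions based on the curl output shown later in this post, not the original source.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


class GreetingHandler(BaseHTTPRequestHandler):
    """Answers every GET request with a small JSON greeting."""

    def do_GET(self) -> None:
        body = json.dumps({"message": "Hello, container!"}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def main(port: int = 8081) -> None:
    # Bind to all interfaces: inside a container, binding only to 127.0.0.1
    # would make the server unreachable through a published port (-p)
    server = HTTPServer(("0.0.0.0", port), GreetingHandler)
    print(f"Listening on port {port}", flush=True)
    server.serve_forever()


if __name__ == "__main__":
    main()
```

Binding to 0.0.0.0 is the important design choice here: traffic forwarded by Docker arrives on the container’s network interface, not on its loopback interface.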

We’ve already built two images earlier in this post, but this time we’ll use a tag that refers to a repository on Docker Hub, which is the default registry when a tag doesn’t name one:

```console
$ docker build -t antsinmyey3sjohnson/hello-container-service:1.0 .
```

One of the great benefits of container images is how easily they can be shared via a remote registry. Once you’re logged in with docker login, the following command publishes our image to Docker Hub:

```console
$ docker push antsinmyey3sjohnson/hello-container-service:1.0
```

And from there, we can run it like so:

```console
$ docker run --name hello-container-service --rm -t -p 8081:8081 antsinmyey3sjohnson/hello-container-service:1.0
```

Here, --rm removes the container and its filesystem once the container exits; -t allocates a pseudo-TTY, so the output the Python script prints to STDOUT shows up in our terminal right away (without a TTY, Python buffers that output); and -p forwards port 8081 on our host to port 8081 in the container, where our script is listening for requests.

Querying localhost:8081 yields the following output:

```console
$ curl localhost:8081
{"message": "Hello, container!"}
```
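If curl isn’t at hand, the same request can be made with Python’s standard library. A small sketch, assuming the container from above is running and port 8081 is published on localhost; the function name fetch_greeting is hypothetical.

```python
import json
import urllib.request


def fetch_greeting(url: str = "http://localhost:8081") -> str:
    """Send a GET request to the service and return its greeting message."""
    with urllib.request.urlopen(url, timeout=5) as response:
        payload = json.loads(response.read().decode("utf-8"))
    return payload["message"]


if __name__ == "__main__":
    print(fetch_greeting())
```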

The beauty of this is that anyone with an internet connection and a running Docker daemon can start this service simply by using the run command above, provided the image is public: if the image is not available locally, the Docker daemon automatically pulls it from the registry and then runs the container.

Wrap-Up: Containers Are Awesome!

Container images are an incredibly useful tool for encapsulating application code and its dependencies. As such, they are the perfect foundation for building an application on top of a microservice architecture: each microservice is baked into its own image and runs inside its own container.

The layered filesystem approach of the Docker image format comes with two pitfalls, but building container images is nonetheless pretty straightforward, and thanks to Docker’s multi-stage build feature, we can make sure an image contains only what the application it encapsulates really needs.

Docker also makes it very easy to run container instances from existing images, and remote registries can be used to store those images and share them, either publicly with anyone or, in the case of private registries, with only a restricted set of people.

Container images and containers become even more powerful when combined with a container orchestration framework, such as Kubernetes. That’s why in the upcoming blog post, we’ll create a Kubernetes cluster on a public cloud and run our first container on it.